TempME: Towards the Explainability of Temporal Graph Neural Networks via Motif Discovery

Introduction

Temporal graphs are widely used to model dynamic systems with time-varying interactions. Despite the widespread success of Temporal Graph Neural Networks (TGNN), these models often lack transparency and function as black boxes. The goal of explainability is to discover what patterns in data have been recognized that trigger certain predictions from the model.

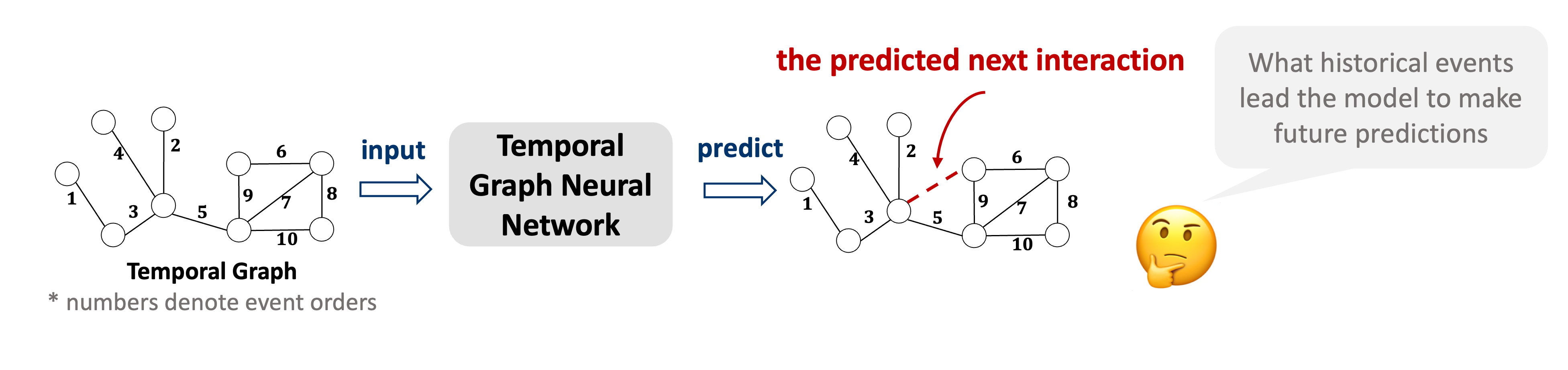

Figure 1: Explainability for temporal graph neural network

Important historical events should be temporally proximate and spatially adjacent to construct cohesive explanations, which provide human-intelligible insights and understanding. However, recent attempts at TGNN explainability all face the critical challenge of generating cohesive explanations.

Figure 1: Explainability for temporal graph neural network

Important historical events should be temporally proximate and spatially adjacent to construct cohesive explanations, which provide human-intelligible insights and understanding. However, recent attempts at TGNN explainability all face the critical challenge of generating cohesive explanations.

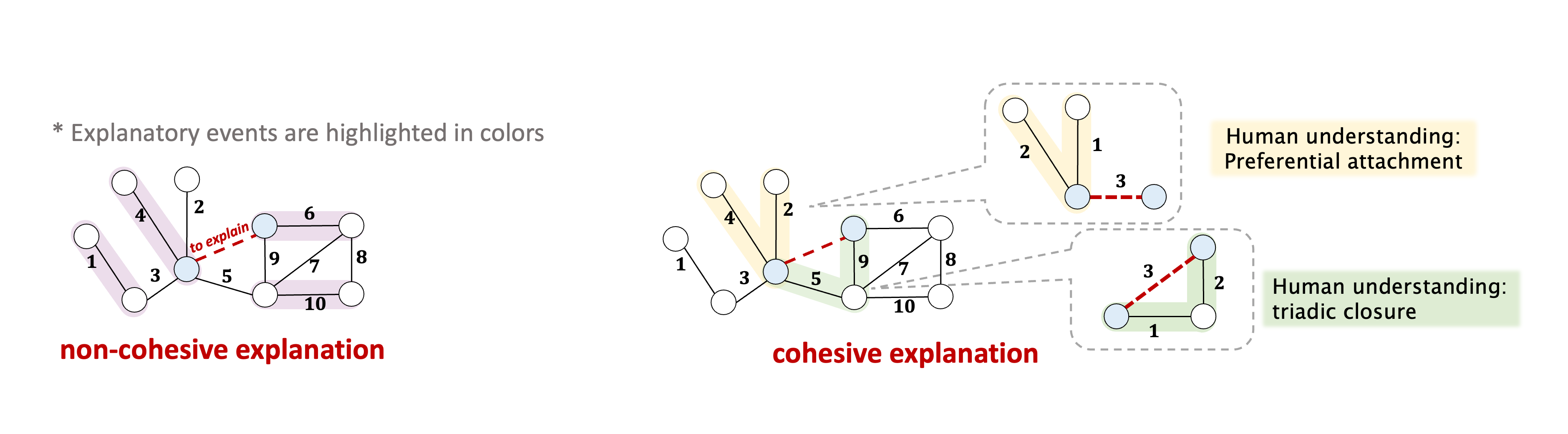

Figure 2: Cohesive Explanation

To address the aforementioned challenges of temporal explanations, we propose to utilize temporal motifs in the explanation task. Temporal motifs refer to recurring substructures within the graph. Recent studies demonstrate that these temporal motifs are essential factors that control the generative mechanisms of future events in real-world temporal graphs and dynamic systems. They are plausible and reliable composition units to explain TGNN predictions. Moreover, the intrinsic self-connectivity of temporal motifs guarantees the cohesive property of the generated explanations.

Figure 2: Cohesive Explanation

To address the aforementioned challenges of temporal explanations, we propose to utilize temporal motifs in the explanation task. Temporal motifs refer to recurring substructures within the graph. Recent studies demonstrate that these temporal motifs are essential factors that control the generative mechanisms of future events in real-world temporal graphs and dynamic systems. They are plausible and reliable composition units to explain TGNN predictions. Moreover, the intrinsic self-connectivity of temporal motifs guarantees the cohesive property of the generated explanations.

In this work, we propose TempME, a novel Temporal Motif-based Explainer to identify the most determinant temporal motifs to explain the reasoning logic of temporal GNNs. TempME leverages historical events to train a generative model that captures the underlying distribution of explanatory motifs. TempME is theoretically grounded by Information Bottleneck (IB), which finds the best tradeoff between explanation accuracy and compression. Different from previous works that only focus on the effect of singular events, TempME is the first to bring additional knowledge about the effect of each temporal motif.

Method

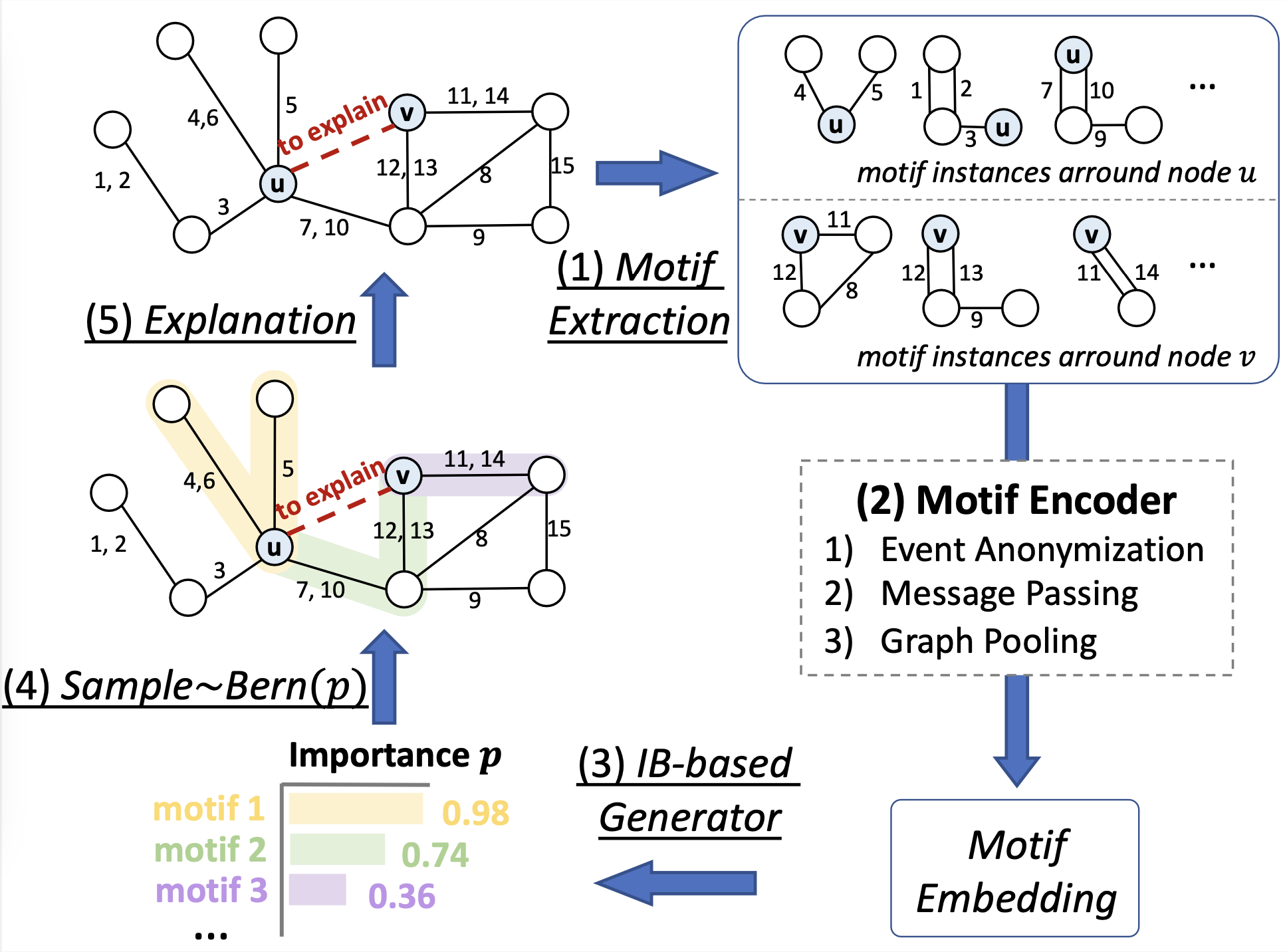

The pipeline of TempME is shown in Figure 3. Given a temporal graph and a future prediction between node and node to be explained, TempME first samples surrounding temporal motif instances. Then a Motif Encoder creates expressive Motif Embedding for each extracted motif instance. Based on Information-bottleneck (IB) principle, TempME characterizes the importance scores of these temporal motifs.

Figure 3: Architecture of TempME

Figure 3: Architecture of TempME

Temporal Motif Sampling

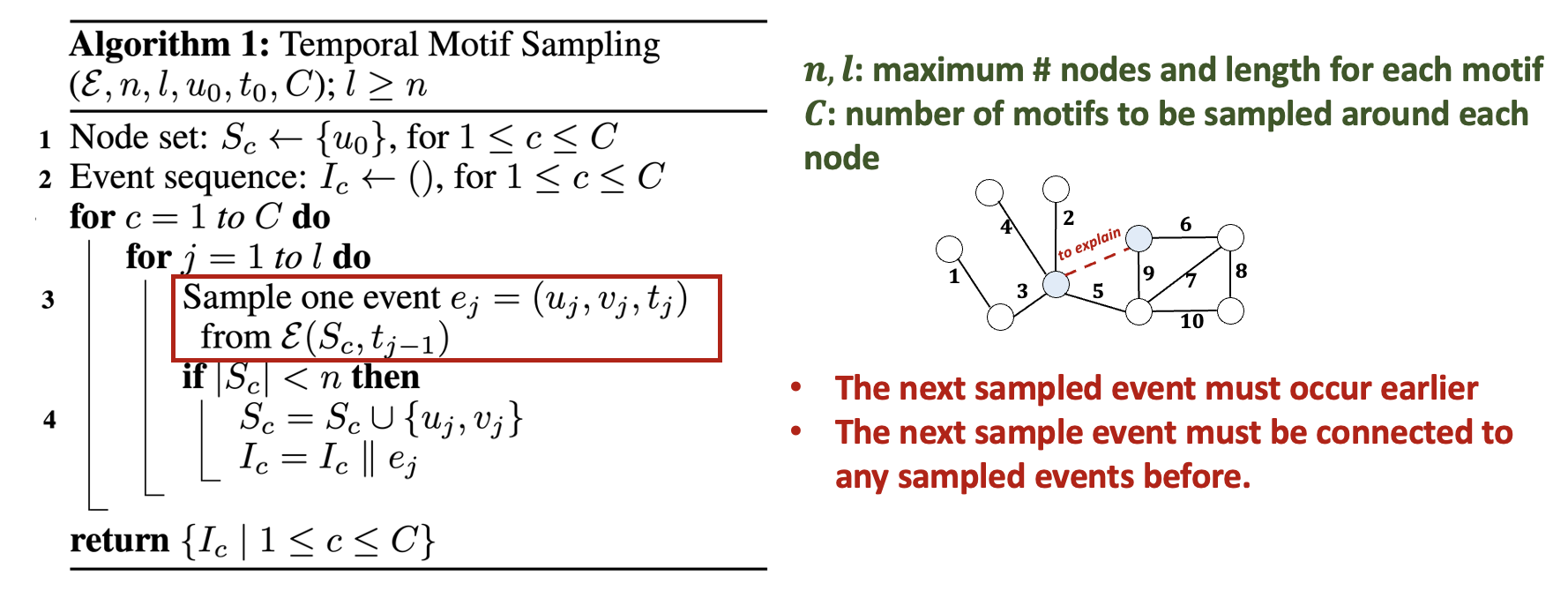

Given a temporal graph with historical events and node of interest at time , we sample retrospective temporal motifs with at most nodes and events, starting from . Following algorithm shows our Temporal Motif Sampling approach, where denotes the set of historical events that occur to any node in before time . At each step, we sample one event from the set of historical events and ensure that the sampled subgraph is self-connected.

Algorithm of temporal motif sampling

Algorithm of temporal motif sampling

Motif Encoder

We sample surrounding temporal motif instances starting from and , respectively, denoted as and . We design a Motif Encoder to learn motif-level representations for each surrounding motif in and . To maintain the inductiveness, we create structural features to anatomize event identities by counting the appearance at certain positions: (abbreviated as for simplicity) is a -dimensional structural feature of where the -th element denotes the number of interactions between and at the -th sampling position in . Given a motif instance with node set and event set , let denote the associated feature of node . denotes the event feature of event , where is the associated event attribute and with learnable parameters . We perform message passing to aggregate neighboring information and then apply the Readout function to pool node features. The importance score of the instance is predicted by an MLP module: . The final explanation can thus be induced by all sampled important motifs according to the importance scores.

Information-Bottleneck principle

Let and denote the explanation and the computational graph of event . The Information-Bottleneck objective maximizes mutual information with the target prediction while minimizing mutual information with the original temporal graph: where refers to the original prediction of event , is the regularization coefficient and is a constraint on the explanation size. The first term can be estimated with the cross-entropy between the original prediction and the output of base model given . We propose to formulate the second term as the mutual information between the original motif set and the selected important motif subset . We utilize a variational approximation to replace its marginal distribution and obtain the upper bound of with Kullback–Leibler divergence: where denotes all learnable parameters.

We provide two different prior distributions for : uniform and empirical. In the uniform setting, is the product of Bernoulli distributions with probability , that is, each motif shares the same probability being in the explanation. uniform setting ignores the effect of the null model, which is essentially a randomized version of the empirical network, generated by shuffling or randomizing certain properties while preserving some structural aspects of the original graph. In empirical setting, let denote equivalence classes of temporal motifs and is the sequence of normalized class probabilities occurring in with , where is the importance score of the motif instance . Correspondingly, we have denoting the sequence of normalized class probabilities in the null model. The prior belief about the average probability of a motif being important for prediction is fixed as . The final training objective becomes where measures the sparsity of the generated explanation. TempME shares spirits with perturbation-based explanations, where "interpretable components" corresponds to temporal motifs and the "reference" is the null model.

Experiments

Datasets and Baselines

We evaluate TempME on temporal graph neural network (TGNN) explainability using diverse datasets, including

- Wikipedia, Reddit: social networks with rich interaction attributes;

- Enron, UCI: temporal graphs without interaction attributes;

- Can.Parl., US Legis: political networks with single attributes.

We benchmark several base TGNNs (TGAT, TGN, GraphMixer) and compare various explainability baselines:

- attention-based approach: ATTN;

- gradient-based approach: Grad-CAM;

- static GNN explainers: GNNExplainer, PGExplainer;

- Temporal GNN explainer: TGNNExplainer.

Key metrics include accuracy, measuring the alignment between explanations and base model predictions, and a fidelity score assessing the impact of generated explanations on edge-specific predictions.

Explanation Accuracy and fidelity

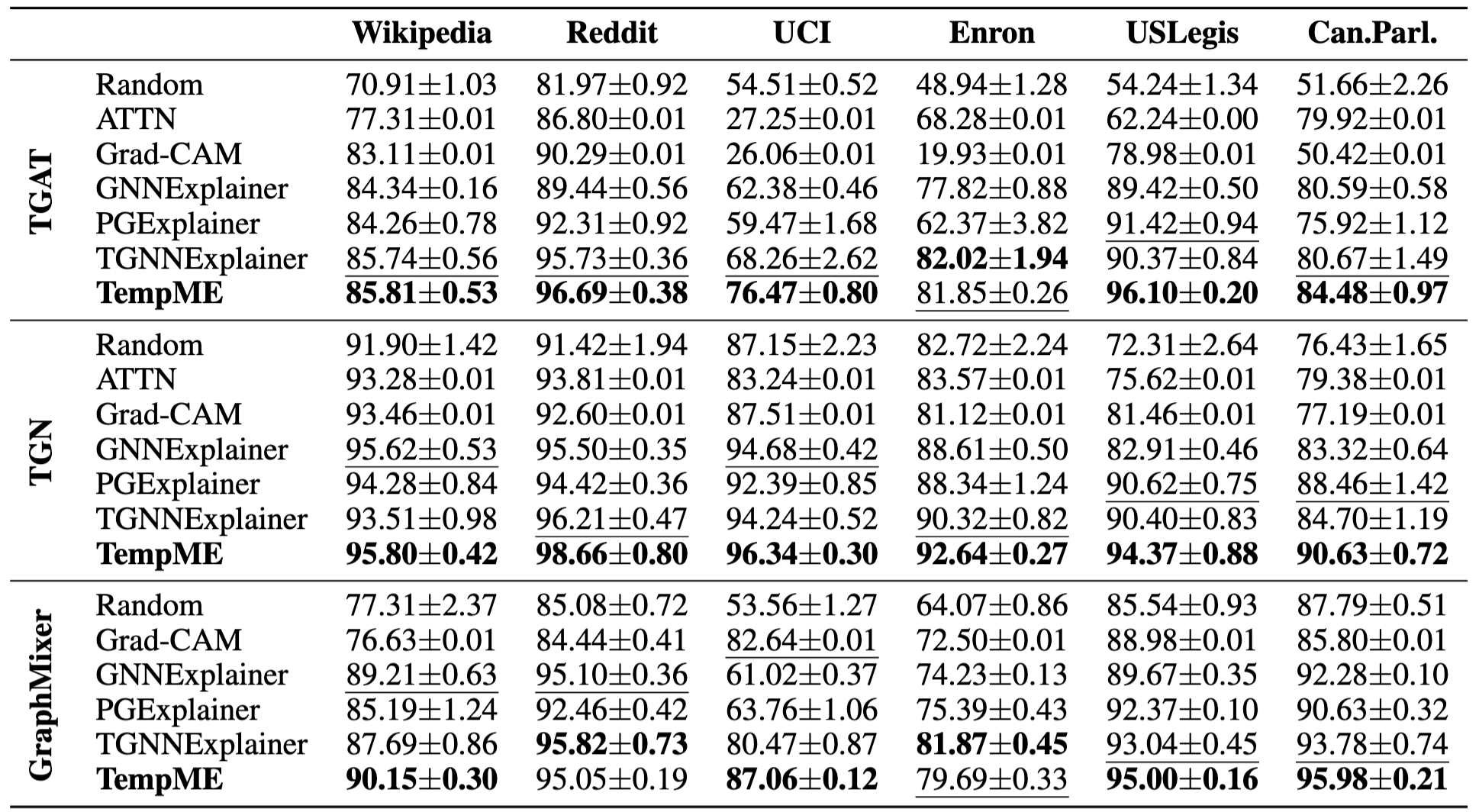

Table 1: Explanation ACC-AUC Comparison

Table 1 shows the explanation performance of TempME and other baselines w.r.t. ACC-AUC. We have the following observations:

Table 1: Explanation ACC-AUC Comparison

Table 1 shows the explanation performance of TempME and other baselines w.r.t. ACC-AUC. We have the following observations:

- The performance of baseline models exhibits considerable variation.

- ATTN and Grad-CAM work well on datasets with rich attributes, while yielding poor performances on unattributed datasets.

- The effectiveness of TempME is consistent across different datasets and different base models.

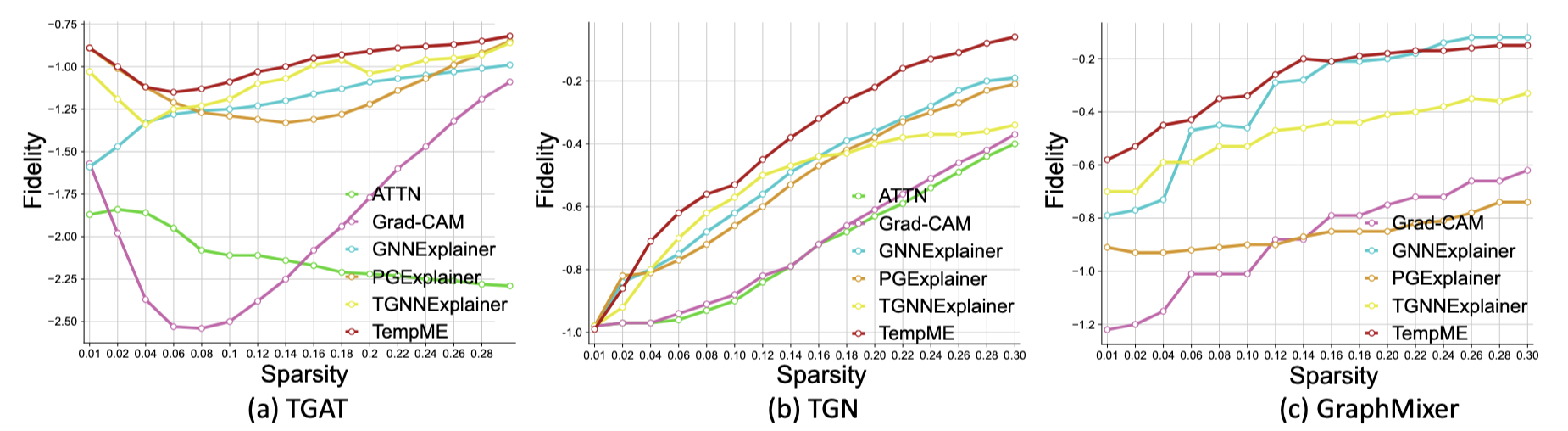

Figure 4: Fidelity-Sparsity Curves on Wikipedia dataset with different base models

From Figure 4, we observe that

Figure 4: Fidelity-Sparsity Curves on Wikipedia dataset with different base models

From Figure 4, we observe that

- TempME surpasses the baselines in explanation fidelity, especially with a low sparsity level.

- TGAT gives priority to a narrow subset (e.g., 1%) of historical events, while TGN and GraphMixer rely on a wider range of historical events.

Enhanced Link Prediction Performance

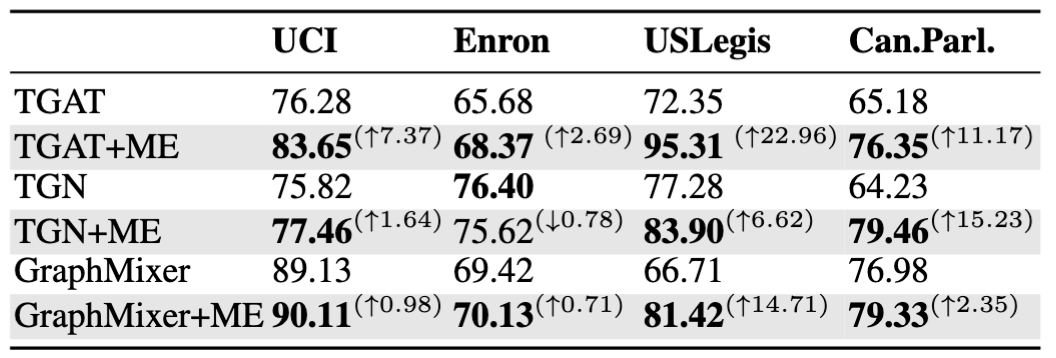

Table 2: Link prediction results (Average Precision) of base models with Motif Embedding (ME)

The performance of base models on link prediction with and without Motif Embeddings (ME) is shown in Table 2. Motif Embedding provides augmenting information for link prediction and generally improves the performance of base models. Notably, TGAT achieves a substantial boost, with an Average Precision of on USLegis, surpassing the performance of state-of-the-art models on USLegis.

Table 2: Link prediction results (Average Precision) of base models with Motif Embedding (ME)

The performance of base models on link prediction with and without Motif Embeddings (ME) is shown in Table 2. Motif Embedding provides augmenting information for link prediction and generally improves the performance of base models. Notably, TGAT achieves a substantial boost, with an Average Precision of on USLegis, surpassing the performance of state-of-the-art models on USLegis.

Uniform V.S. Empirical

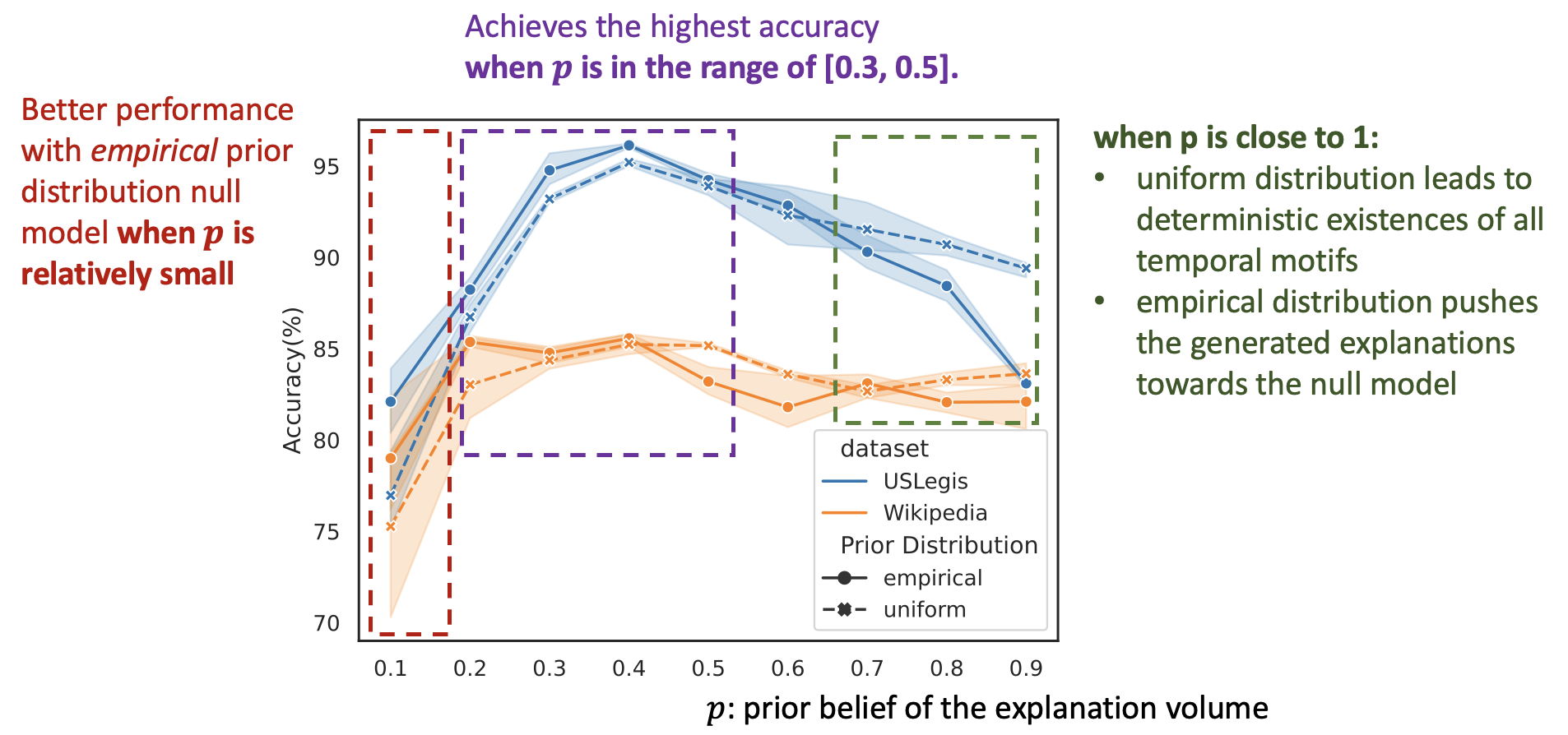

Figure 5: Comparison between uniform and empirical prior distribution

Figure 5: Comparison between uniform and empirical prior distribution

Figure 5 shows the comparison between uniform and empirical prior distribution in terms of ACC-AUC over sparsity levels from 0 to 0.3. TempME achieves the highest ACC-AUC when the prior belief of the explanation volume is in the range of . Notably, TempME performs better with empirical prior distribution when is relatively small, resulting in sparser and more compact explanations. This improvement can be attributed to the incorporation of the null model, which highlights temporal motifs that differ significantly in frequency from the null model. It is worth noting that when is close to 1, uniform prior distribution leads to deterministic existences of all temporal motifs while empirical prior distribution pushes the generated explanations towards the null model, which forms the reason for the ACC-AUC difference of empirical and uniform as approaches 1.